Using Chinda LLM 4B with OpenWebUI - Complete User Guide

🎯 Introduction

Chinda LLM 4B is an open-source Thai language model developed by the iApp Technology team, capable of thinking and responding in Thai with high accuracy using the latest Qwen3-4B architecture.

OpenWebUI is a beautiful, user-friendly web interface for Ollama that provides ChatGPT-like experience for local AI models. It offers an intuitive chat interface with features like conversation history, model management, and customizable settings.

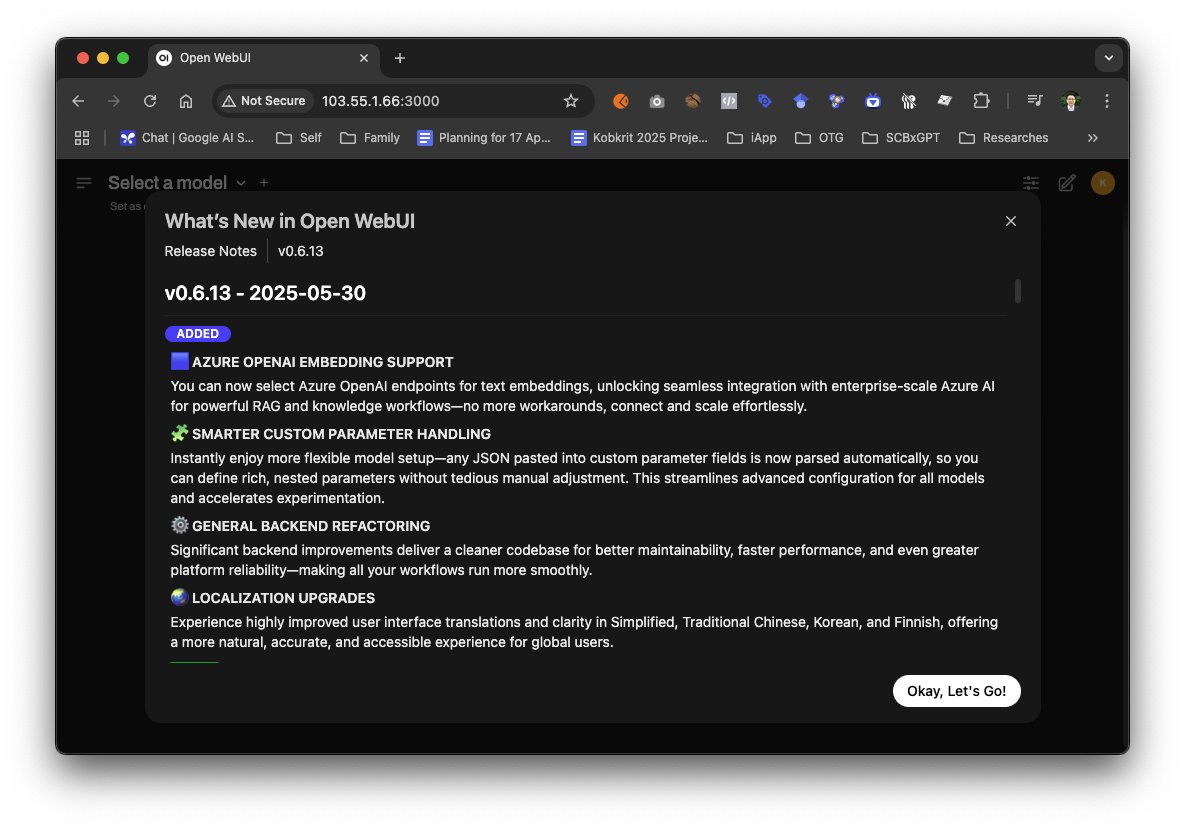

The latest version of OpenWebUI (v0.6.13) includes exciting new features such as Azure OpenAI embedding support, smarter custom parameter handling, general backend refactoring, and improved localization for multiple languages.

🚀 Step 1: Installing Docker

Install Docker

If you don't have Docker installed:

- Windows/Mac: Download from https://www.docker.com/products/docker-desktop

- Linux: Follow your distribution's Docker installation guide

GPU Support Setup (Optional but Recommended)

For NVIDIA GPUs:

Install NVIDIA Container Toolkit to enable GPU acceleration:

Ubuntu/Debian:

# Add NVIDIA package repositories

distribution=$(. /etc/os-release;echo $ID$VERSION_ID)

curl -s -L https://nvidia.github.io/nvidia-docker/gpgkey | sudo apt-key add -

curl -s -L https://nvidia.github.io/nvidia-docker/$distribution/nvidia-docker.list | sudo tee /etc/apt/sources.list.d/nvidia-docker.list

# Install nvidia-container-toolkit

sudo apt-get update && sudo apt-get install -y nvidia-container-toolkit

sudo systemctl restart docker

For other Linux distributions, macOS, or Windows: Follow the NVIDIA Container Toolkit installation guide

📱 Step 2: Running OpenWebUI with Integrated Ollama

Method 1: OpenWebUI with Built-in Ollama (Recommended)

This method runs both OpenWebUI and Ollama in a single container setup:

For systems WITHOUT GPU:

docker run -d -p 3000:8080 -v ollama:/root/.ollama -v open-webui:/app/backend/data --name open-webui --restart always ghcr.io/open-webui/open-webui:ollama

For systems WITH NVIDIA GPU:

docker run -d -p 3000:8080 --gpus all -v ollama:/root/.ollama -v open-webui:/app/backend/data --name open-webui --restart always ghcr.io/open-webui/open-webui:ollama

Method 2: Using Docker Compose (Advanced)

Create a docker-compose.yml file:

version: '3.8'

services:

open-webui:

image: ghcr.io/open-webui/open-webui:ollama

container_name: open-webui

ports:

- "3000:8080"

volumes:

- ollama:/root/.ollama

- open-webui:/app/backend/data

restart: always

# Uncomment the following lines for GPU support

# deploy:

# resources:

# reservations:

# devices:

# - driver: nvidia

# count: all

# capabilities: [gpu]

volumes:

ollama:

open-webui:

Run with:

docker-compose up -d

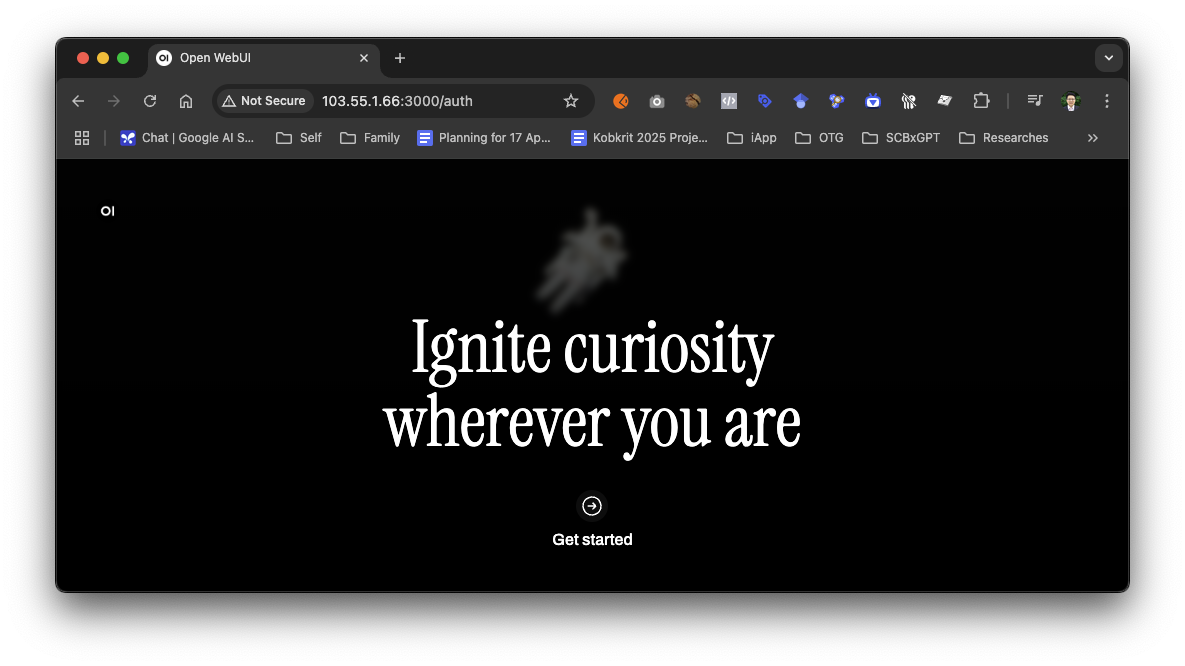

Access OpenWebUI

- Open your web browser

- Go to

http://localhost:3000 - You should see the OpenWebUI welcome screen

🔍 Step 3: Download Chinda LLM 4B Model

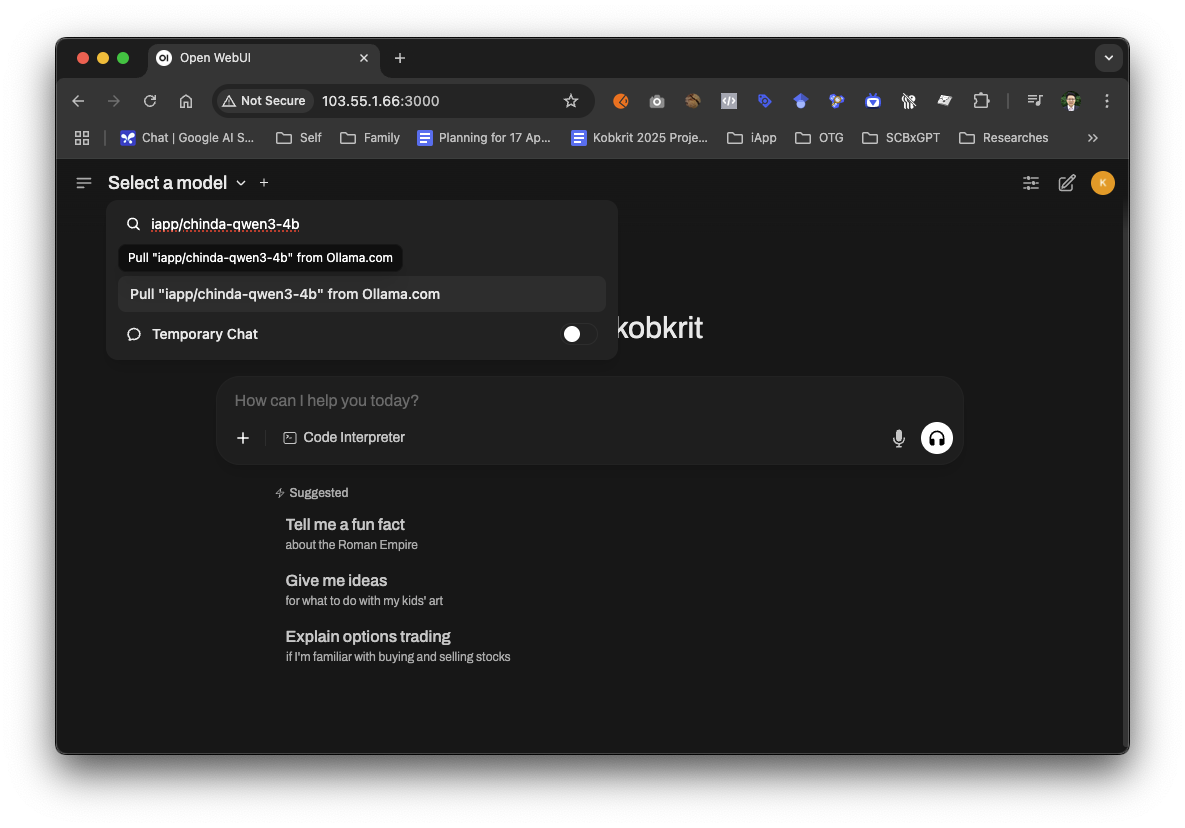

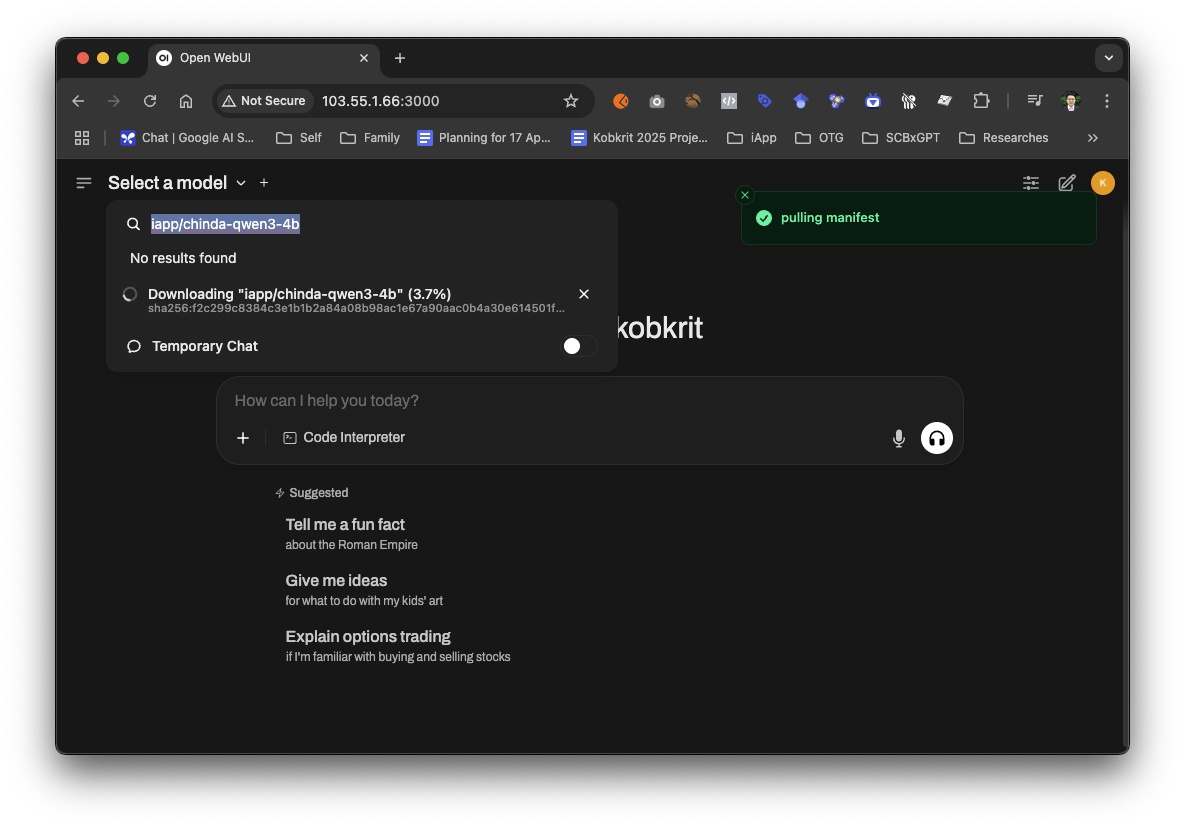

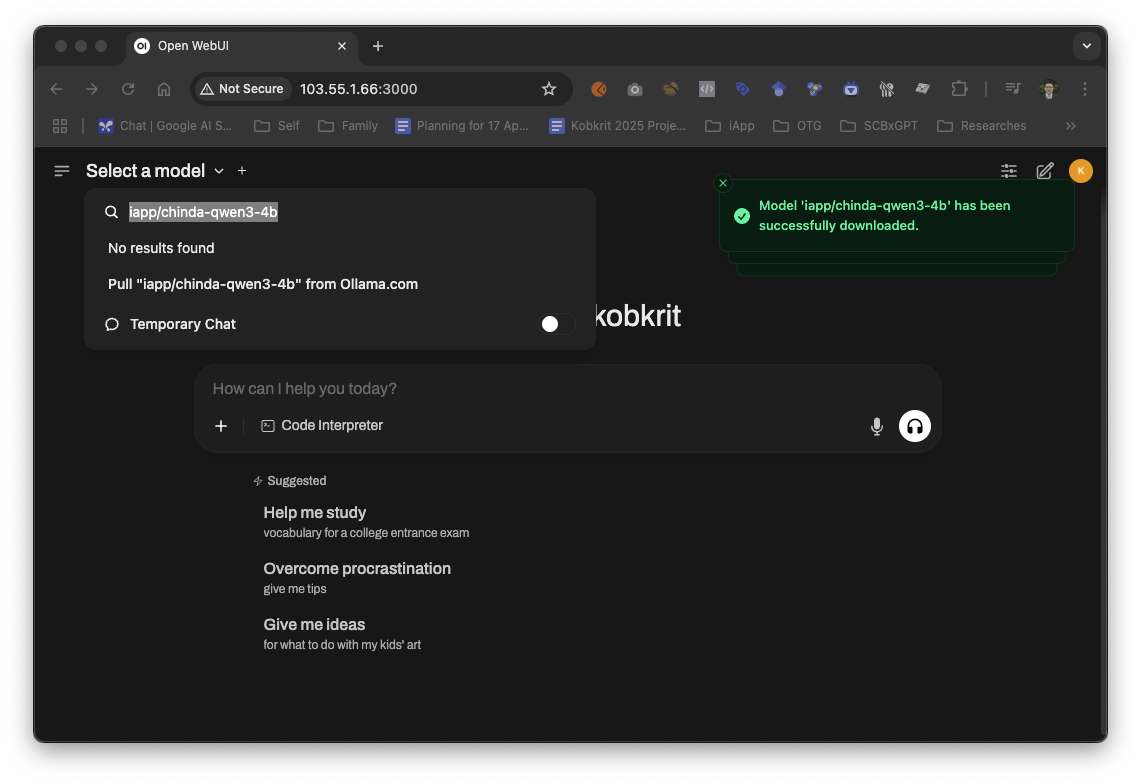

Using OpenWebUI Interface (Recommended)

Once OpenWebUI is running, you can download the Chinda LLM 4B model directly through the web interface:

- Open your browser and go to

http://localhost:3000 - Complete the initial setup (create account)

- Search for the model by typing

iapp/chinda-qwen3-4bin the model search box

- Click Pull "iapp/chinda-qwen3-4b" from Ollama.com

- Wait for the download to complete (approximately 2.5GB)

- You'll see a success notification when the model is downloaded

Using Docker Exec (Alternative)

You can also download the model using Docker exec:

# Download Chinda LLM 4B model

docker exec -it open-webui ollama pull iapp/chinda-qwen3-4b

# Verify the model is downloaded

docker exec -it open-webui ollama list

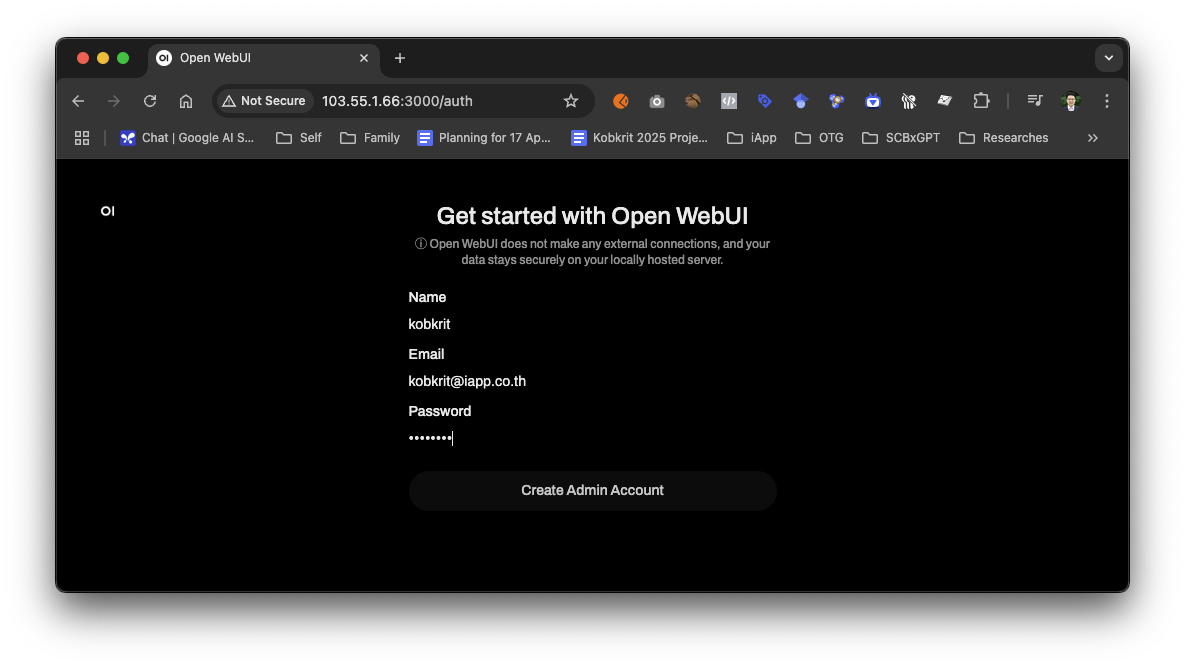

🔍 Step 4: Initial Setup

Create Your Account

- On the first visit, you'll see a Sign Up form

- Enter your preferred email and password

- Click Create Account

- You'll be automatically logged in

Note: This is a local account only for your computer - no data is sent to external servers.

⚙️ Step 5: Using Chinda LLM 4B

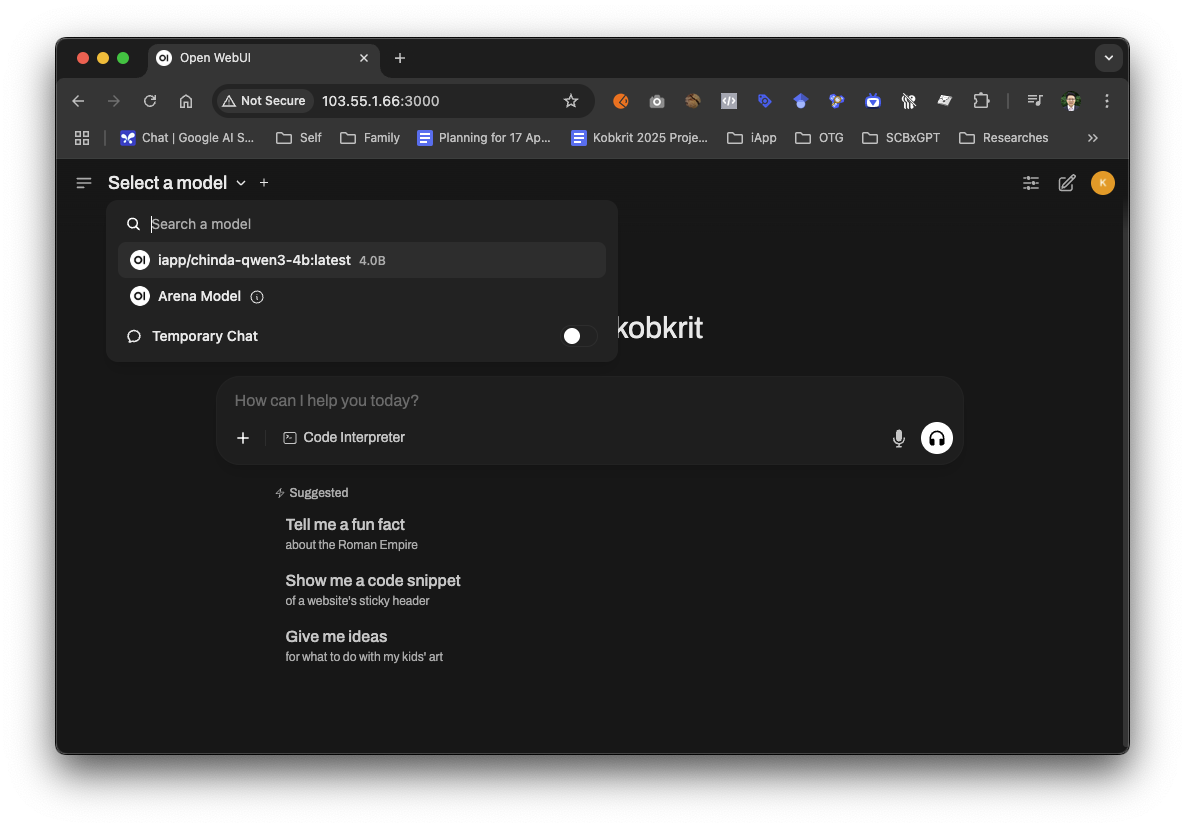

Select the Model

- In the chat interface, look for the Model dropdown at the top

- Select

chinda-qwen3-4b:latestfrom the list - If you don't see it, make sure you've downloaded the model using the steps above

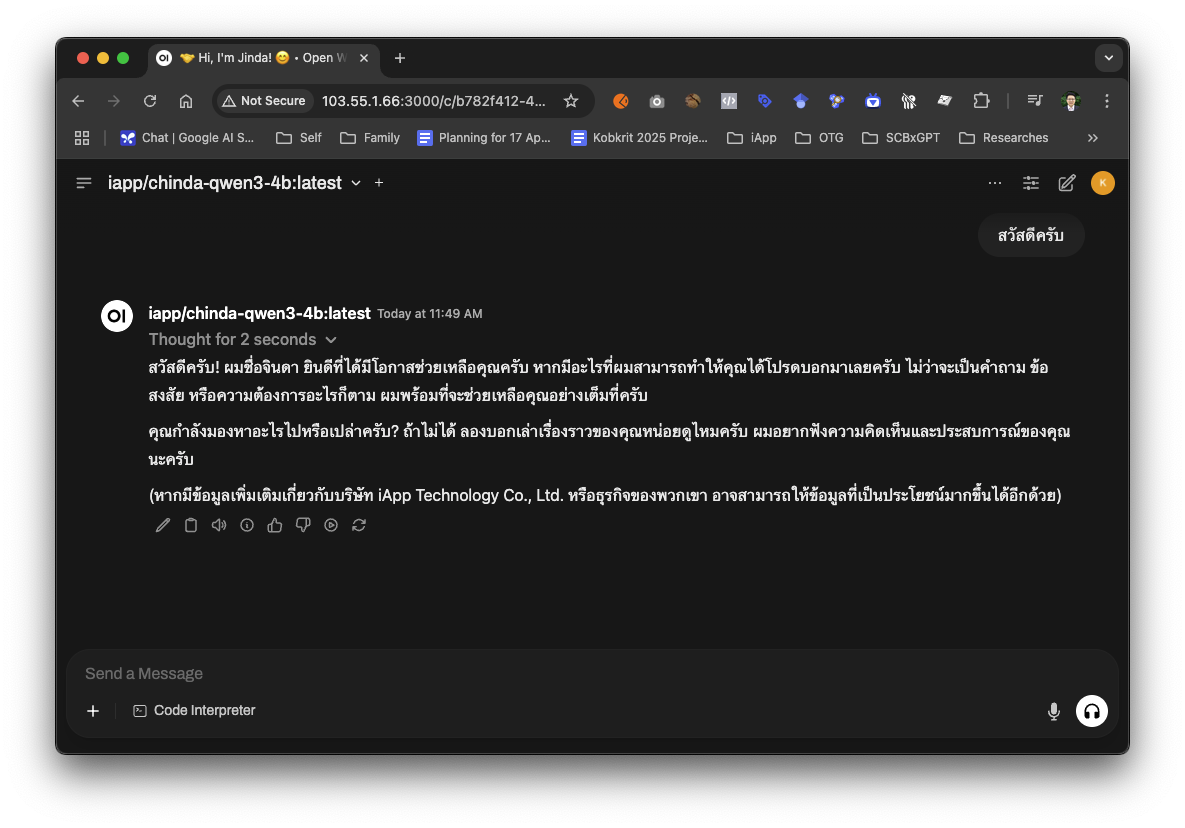

Start Your First Conversation

- Type your message in Thai in the chat box

- Press Enter or click the send button

- Chinda LLM will respond in Thai

Example Conversations:

As you can see in the screenshot above, Chinda LLM responds naturally in Thai and introduces itself as an AI assistant developed by iApp Technology Co., Ltd. The model demonstrates its capability to understand context and provide thoughtful responses in Thai.

Mathematics Question:

You: ช่วยแก้สมการ 3x + 7 = 22 ให้หน่อย

Chinda: ผมจะช่วยแก้สมการ 3x + 7 = 22 ให้คุณครับ

ขั้นตอนการแก้:

1) 3x + 7 = 22

2) 3x = 22 - 7

3) 3x = 15

4) x = 15 ÷ 3

5) x = 5

ดังนั้น x = 5 ครับ

Document Writing:

You: ช่วยเขียนอีเมลขอบคุณลูกค้าให้หน่อย

Chinda: ผมจะช่วยเขียนอีเมลขอบคุณลูกค้าให้คุณครับ

เรื่อง: ขอบพระคุณสำหรับการใช้บริการ

�เรียน คุณลูกค้าที่เคารพ,

ทางบริษัทฯ ขอขอบพระคุณอย่างสูงสำหรับการให้ความไว้วางใจและใช้บริการของเรา...

🎨 Step 6: Advanced Features

Conversation Management

Create New Chats:

- Click the + button to start a new conversation

- Each conversation is saved separately

- You can switch between conversations anytime

Rename Conversations:

- Click on the conversation title

- Enter a new name to organize your chats

Export Conversations:

- Click on the conversation settings (three dots)

- Select Export to save as JSON or Markdown

Customize Model Settings

Access Model Settings:

- Click the Settings icon next to the model name

- Adjust parameters like:

- Temperature (0.1-1.0): Controls creativity

- Top P (0.1-1.0): Controls response diversity

- Top K (1-100): Limits vocabulary choices

- Max Tokens: Maximum response length

Recommended Settings for Chinda LLM:

- Temperature: 0.7 (balanced creativity)

- Top P: 0.9 (good diversity)

- Top K: 40 (focused responses)

System Prompts

You can set custom system prompts to modify Chinda's behavior:

Example System Prompts:

For Professional Writing:

คุณเป็นผู้ช่วยเขียนเอกสารมืออาชีพ ตอบด้วยภาษาไทยที่เป็นทางการและสุภาพ

For Educational Content:

คุณเป็นครูสอนภาษาไทย อธิบายให้เข้าใจง่าย ใช้ตัวอย่างประกอบ

For Creative Writing:

คุณเป็นนักเขียนที่มีจินตนาการสูง สร้างเนื้อหาที่น่าสนใจและสร้างสรรค์

📊 Step 7: Model Management

View Available Models

- Go to Settings → Models

- See all downloaded models

- Check model sizes and information

Download New Models

Using the container:

# Download new models using docker exec

docker exec -it open-webui ollama pull <model-name>

Or use the OpenWebUI interface: Settings → Models → Pull a model from Ollama.com

Remove Models

# Remove models using docker exec

docker exec -it open-webui ollama rm <model-name>

🔧 Step 8: Troubleshooting

Common Issues and Solutions

Issue: Can't See Chinda Model

Symptoms: Model doesn't appear in the dropdown Solutions:

- Check if container is running:

docker ps - Verify model is downloaded:

docker exec -it open-webui ollama list - Download the model if needed:

docker exec -it open-webui ollama pull iapp/chinda-qwen3-4b - Refresh OpenWebUI page

Issue: "Connection Error" or Container Won't Start

Symptoms: Can't access OpenWebUI or container fails to start Solutions:

- Check if Docker is running:

docker version - Verify container status:

docker ps -a - Check container logs:

docker logs open-webui - Restart the container:

docker restart open-webui

Issue: Slow Responses

Solutions:

- Close unnecessary applications

- Reduce Max Tokens in model settings

- Lower temperature and top_k values

- Ensure sufficient RAM (8GB+ recommended)

Issue: GPU Not Working

Symptoms: Slow performance despite having GPU Solutions:

- Verify NVIDIA drivers:

nvidia-smi - Check NVIDIA Container Toolkit:

docker run --rm --gpus all nvidia/cuda:11.0-base nvidia-smi - Restart container with GPU support:

# Stop existing container

docker stop open-webui

docker rm open-webui

# Run with GPU support

docker run -d -p 3000:8080 --gpus all -v ollama:/root/.ollama -v open-webui:/app/backend/data --name open-webui --restart always ghcr.io/open-webui/open-webui:ollama

Performance Optimization

- Use SSD storage for better model loading

- Allocate sufficient RAM (minimum 8GB)

- Close background applications

- Use lower precision settings if memory is limited

🎯 Suitable Use Cases

✅ What Chinda LLM 4B Does Well in OpenWebUI

- Interactive Conversations - Natural chat experience with conversation history

- Document Drafting - Write and iterate on documents with easy editing

- Educational Content - Learn with saved conversation references

- Creative Writing - Develop stories and content with multiple revisions

- Programming Help - Code generation with syntax highlighting

- Language Translation - Quick Thai-English translations

- Research Assistant - Organize information across multiple chats

🌟 OpenWebUI Specific Advantages

- Beautiful Interface - Clean, modern design like ChatGPT

- Conversation History - All chats are automatically saved

- Multi-Model Support - Switch between different models easily

- Export Options - Save conversations in various formats

- Customizable Settings - Fine-tune model behavior per conversation

- No Internet Required - Complete privacy with local processing

❌ Limitations to Be Aware Of

Don't ask for facts without context such as:

- Latest news events (model training cutoff applies)

- Specific statistical data without sources

- Real-time information

- Information about specific people or current events

Since Chinda LLM 4B is a 4B parameter model, it may generate incorrect information (hallucination) when asked about specific facts.

🚀 Model Specifications

- Size: 2.5GB (quantized)

- Context Window: 40K tokens

- Architecture: Based on Qwen3-4B, optimized for Thai language

- Performance: Fast inference on consumer hardware

- Memory Requirements: Minimum 4GB RAM, recommended 8GB+

- Interface: Modern web-based chat interface

🔮 What's Coming

The iApp Technology team is developing a new, larger model that will be able to answer factual questions more accurately. It is expected to be released soon and will be compatible with OpenWebUI.

📚 Additional Resources

Quick Setup Commands

Without GPU:

# 1. Run OpenWebUI with integrated Ollama

docker run -d -p 3000:8080 -v ollama:/root/.ollama -v open-webui:/app/backend/data --name open-webui --restart always ghcr.io/open-webui/open-webui:ollama

# 2. Open browser to http://localhost:3000

# 3. Download Chinda LLM model (via web interface or command)

docker exec -it open-webui ollama pull iapp/chinda-qwen3-4b

With GPU:

# 1. Run OpenWebUI with GPU support

docker run -d -p 3000:8080 --gpus all -v ollama:/root/.ollama -v open-webui:/app/backend/data --name open-webui --restart always ghcr.io/open-webui/open-webui:ollama

# 2. Open browser to http://localhost:3000

# 3. Download Chinda LLM model

docker exec -it open-webui ollama pull iapp/chinda-qwen3-4b

Useful Keyboard Shortcuts

- Ctrl/Cmd + Enter: Send message

- Ctrl/Cmd + N: New conversation

- Ctrl/Cmd + K: Focus search

- Esc: Cancel current generation

Docker Management Commands

# View container status

docker ps

# Stop OpenWebUI

docker stop open-webui

# Start OpenWebUI

docker start open-webui

# Restart OpenWebUI

docker restart open-webui

# View logs

docker logs open-webui

# Access container shell

docker exec -it open-webui bash

# Check Ollama models inside container

docker exec -it open-webui ollama list

# Update OpenWebUI

docker pull ghcr.io/open-webui/open-webui:ollama

docker stop open-webui

docker rm open-webui

# Then run the installation command again

# Backup volumes (important!)

docker run --rm -v ollama:/source -v $(pwd):/backup alpine tar czf /backup/ollama-backup.tar.gz -C /source .

docker run --rm -v open-webui:/source -v $(pwd):/backup alpine tar czf /backup/openwebui-backup.tar.gz -C /source .

Links and Resources

- 🌐 Demo: https://chindax.iapp.co.th (Choose ChindaLLM 4b)

- 📦 Model Download: https://huggingface.co/iapp/chinda-qwen3-4b

- 🐋 Ollama: https://ollama.com/iapp/chinda-qwen3-4b

- 🌐 OpenWebUI: https://github.com/open-webui/open-webui

- 🏠 Homepage: https://iapp.co.th/openmodels/chinda-opensource-llm

- 📄 License: Apache 2.0 (Available for both commercial and personal use)

🎉 Summary

Chinda LLM 4B with OpenWebUI provides the perfect combination of powerful Thai AI capabilities with a beautiful, user-friendly interface. This setup gives you:

Key Benefits:

- 🎨 Beautiful Interface - Modern web UI similar to ChatGPT

- 💾 Conversation History - Never lose your important chats

- 🔒 Complete Privacy - Everything runs locally on your machine

- 🚀 Easy to Use - No command-line knowledge required

- 🎛️ Customizable - Adjust settings for different use cases

- 📱 Cross-Platform - Works on any device with a web browser

Perfect For:

- Students and Researchers who need organized conversation history

- Writers and Content Creators who want to iterate on ideas

- Business Professionals who need document drafting assistance

- Developers who want a user-friendly interface for AI integration

- Anyone who prefers web interfaces over command-line tools

Start using Chinda LLM 4B with OpenWebUI today and experience the future of Thai AI with the convenience of a modern web interface!

Built with ❤️ by iApp Technology Team - For Thai AI Development