DeepSeek-V3.2

Powered by DeepSeek AI

Welcome to the iApp DeepSeek-V3.2 API, powered by the latest DeepSeek-V3.2 model. This API provides access to one of the most advanced open-source large language models, with strong reasoning capabilities, performance competitive with GPT-4 and Claude Sonnet, and a fully OpenAI-compatible interface.

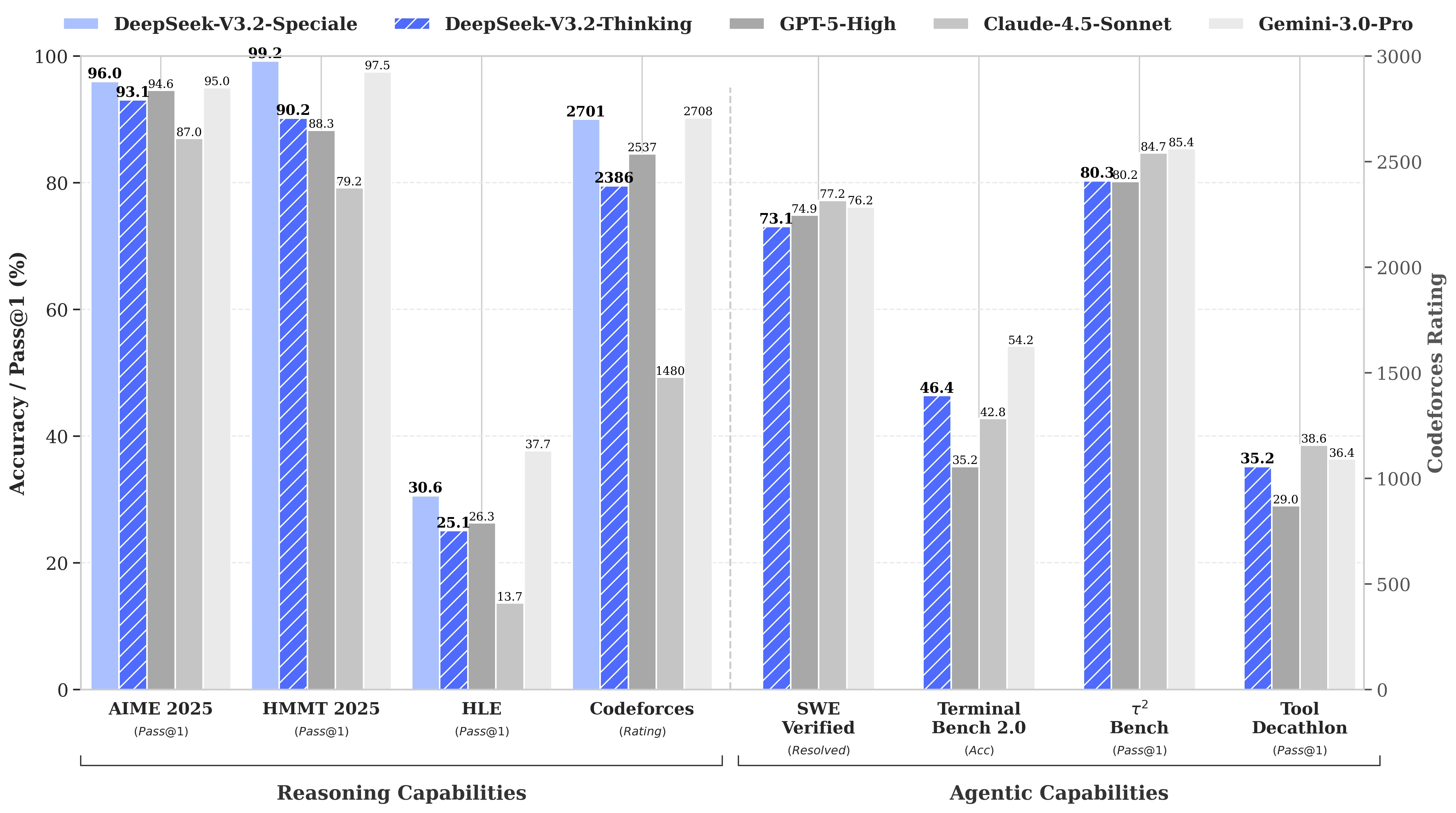

Benchmark Results

DeepSeek-V3.2 delivers competitive performance across multiple benchmarks:

Key Performance Highlights

- 685B Parameters: Massive model with state-of-the-art capabilities

- 128K Context Length: Extended context window for long documents

- Reasoning Mode: Built-in chain-of-thought reasoning with the deepseek-reasoner model

- OpenAI Compatible: Drop-in replacement for OpenAI API

- Gold Medal Performance: Achieves gold-medal-level results on the International Mathematical Olympiad (IMO) and the International Olympiad in Informatics (IOI)

Overview

DeepSeek-V3.2 is a reasoning-focused model designed to balance high computational efficiency with strong reasoning and agent performance. It introduces three core innovations:

- DeepSeek Sparse Attention (DSA): An efficient attention mechanism reducing computational complexity while maintaining performance for extended contexts

- Scalable Reinforcement Learning Framework: Post-training approach for enhanced reasoning capabilities

- Agentic Task Synthesis Pipeline: Integrates reasoning capabilities into tool-use scenarios

Key Features

- Advanced Reasoning: Built-in thinking mode with explicit reasoning process

- OpenAI Compatible: Follows OpenAI API format for easy integration

- Streaming Support: Real-time token streaming for responsive applications

- 128K Context: Supports a context length of up to 128,000 tokens

- Thai & English: Supports both Thai and English languages

- Cost Effective: Competitive pricing for enterprise-grade AI

Getting Started

- Prerequisites
  - An API key from iApp Technology
  - Internet connection

- Quick Start
  - Simple REST API interface
  - OpenAI-compatible format
  - Both streaming and non-streaming support

- Rate Limits
  - 5 requests per second
  - 200 requests per minute

Please visit the API Key Management page to view your existing API key or request a new one.
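To stay under the rate limits listed above (5 requests per second, 200 requests per minute), a client can throttle itself before sending. The following is a minimal sketch, not part of the iApp SDK: the class name `SlidingWindowLimiter` and its parameters are hypothetical, and it assumes the limits are counted over sliding windows, which this page does not specify.

```python
import time
from collections import deque


class SlidingWindowLimiter:
    """Client-side throttle enforcing several (max_calls, window_seconds) limits at once."""

    def __init__(self, limits=((5, 1.0), (200, 60.0)),
                 clock=time.monotonic, sleep=time.sleep):
        self.limits = limits
        self.longest = max(window for _, window in limits)
        self.clock = clock      # injectable for testing
        self.sleep = sleep      # injectable for testing
        self.calls = deque()    # timestamps of granted calls, oldest first

    def _wait_needed(self, now):
        wait = 0.0
        for max_calls, window in self.limits:
            recent = [t for t in self.calls if now - t < window]
            if len(recent) >= max_calls:
                # Wait until enough old calls have left this window.
                blocking = recent[len(recent) - max_calls]
                wait = max(wait, blocking + window - now)
        return wait

    def acquire(self):
        """Block until one more request is allowed, then record it."""
        while True:
            now = self.clock()
            # Forget timestamps older than the longest window.
            while self.calls and now - self.calls[0] >= self.longest:
                self.calls.popleft()
            wait = self._wait_needed(now)
            if wait <= 0:
                self.calls.append(now)
                return
            self.sleep(wait)
```

Calling `limiter.acquire()` immediately before each HTTP request is enough for a single-threaded client. A multi-threaded client would additionally need a lock around `acquire`.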

API Endpoints

| Endpoint | Method | Description | Cost |
|---|---|---|---|
| /v3/llm/deepseek-3p2/chat/completions | POST | Chat completions (streaming & non-streaming) | 0.01 IC/1K input + 0.02 IC/1K output |
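The per-token rates in the table above can be turned into a quick cost estimate. This is a sketch under two assumptions that this page does not confirm: that billing is linear with no rounding or minimum charge, and that reasoning tokens are billed as part of the output token count. The function name `estimate_cost_ic` is hypothetical.

```python
from decimal import Decimal

# Rates from the endpoint table, in IC per 1,000 tokens.
INPUT_IC_PER_1K = Decimal("0.01")
OUTPUT_IC_PER_1K = Decimal("0.02")


def estimate_cost_ic(prompt_tokens: int, completion_tokens: int) -> Decimal:
    """Estimate the IC cost of one request from its token counts.

    Assumes linear billing with no rounding or minimum charge.
    """
    weighted = (Decimal(prompt_tokens) * INPUT_IC_PER_1K
                + Decimal(completion_tokens) * OUTPUT_IC_PER_1K)
    return weighted / Decimal(1000)


# Token counts taken from the `usage` object of a response.
cost = estimate_cost_ic(prompt_tokens=12, completion_tokens=109)
print(f"Estimated cost: {cost} IC")
```

`Decimal` is used instead of `float` so that very small per-request amounts add up without binary rounding drift.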

Available Models

| Model | Description |
|---|---|
| deepseek-reasoner | Thinking mode with explicit reasoning (recommended) |
| deepseek-chat | Non-thinking mode for faster responses |

Code Examples

cURL - Non-Streaming

```bash
curl -X POST 'https://api.iapp.co.th/v3/llm/deepseek-3p2/chat/completions' \
  -H 'apikey: YOUR_API_KEY' \
  -H 'Content-Type: application/json' \
  -d '{
    "model": "deepseek-reasoner",
    "messages": [
      {"role": "user", "content": "สวัสดีครับ คุณช่วยอะไรได้บ้าง?"}
    ],
    "max_tokens": 4096,
    "temperature": 0.7,
    "top_p": 0.9
  }'
```

cURL - Streaming

```bash
curl -X POST 'https://api.iapp.co.th/v3/llm/deepseek-3p2/chat/completions' \
  -H 'apikey: YOUR_API_KEY' \
  -H 'Content-Type: application/json' \
  -d '{
    "model": "deepseek-reasoner",
    "messages": [
      {"role": "user", "content": "สวัสดีครับ คุณช่วยอะไรได้บ้าง?"}
    ],
    "max_tokens": 4096,
    "temperature": 0.7,
    "top_p": 0.9,
    "stream": true
  }'
```

Response (Non-Streaming with Reasoning)

When using deepseek-reasoner, the response includes a reasoning_content field showing the model's thinking process:

```json
{
  "id": "233c1fa8-e6ea-4b4f-86b5-2bbccb39fd9e",
  "object": "chat.completion",
  "created": 1764635256,
  "model": "deepseek-reasoner",
  "choices": [
    {
      "index": 0,
      "message": {
        "role": "assistant",
        "content": "Hello there! How can I assist you today?",
        "reasoning_content": "Hmm, the user just greeted me with \"Hello!\". This is a straightforward opening greeting, so I should respond warmly and invitingly. I can start with a cheerful \"Hello there!\" to mirror their friendly tone, then offer my assistance to encourage them to share what they need. Keeping it simple and open-ended works best here since they haven't specified a request yet."
      },
      "logprobs": null,
      "finish_reason": "stop"
    }
  ],
  "usage": {
    "prompt_tokens": 12,
    "completion_tokens": 109,
    "total_tokens": 121,
    "prompt_tokens_details": {
      "cached_tokens": 0
    },
    "completion_tokens_details": {
      "reasoning_tokens": 96
    },
    "prompt_cache_hit_tokens": 0,
    "prompt_cache_miss_tokens": 12
  },
  "system_fingerprint": "fp_eaab8d114b_prod0820_fp8_kvcache"
}
```
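A client usually wants the final answer and the reasoning trace as separate values, along with how many tokens each consumed. The sketch below reads the fields shown in the response above. The names `ParsedCompletion` and `parse_completion` are hypothetical, and the split of `completion_tokens` into reasoning and answer tokens assumes that `reasoning_tokens` is counted inside `completion_tokens`, which is consistent with the sample but not stated on this page.

```python
from dataclasses import dataclass


@dataclass
class ParsedCompletion:
    answer: str
    reasoning: str          # empty when the model returns no reasoning_content
    reasoning_tokens: int
    answer_tokens: int


def parse_completion(body: dict) -> ParsedCompletion:
    """Pull the answer, reasoning trace, and token split out of a response body."""
    message = body["choices"][0]["message"]
    usage = body.get("usage", {})
    completion_tokens = usage.get("completion_tokens", 0)
    reasoning_tokens = usage.get("completion_tokens_details", {}).get("reasoning_tokens", 0)
    return ParsedCompletion(
        answer=message.get("content") or "",
        reasoning=message.get("reasoning_content") or "",
        reasoning_tokens=reasoning_tokens,
        # Assumption: reasoning tokens are a subset of completion tokens.
        answer_tokens=completion_tokens - reasoning_tokens,
    )


# Trimmed copy of the sample response; the reasoning text is shortened here.
sample = {
    "choices": [{
        "message": {
            "role": "assistant",
            "content": "Hello there! How can I assist you today?",
            "reasoning_content": "Hmm, the user just greeted me...",
        },
        "finish_reason": "stop",
    }],
    "usage": {
        "prompt_tokens": 12,
        "completion_tokens": 109,
        "completion_tokens_details": {"reasoning_tokens": 96},
    },
}

parsed = parse_completion(sample)
print(parsed.answer)
print(parsed.reasoning_tokens, parsed.answer_tokens)
```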

Python

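```python
import requests

url = "https://api.iapp.co.th/v3/llm/deepseek-3p2/chat/completions"

payload = {
    "model": "deepseek-reasoner",
    "messages": [
        # Thai for "Hello, what can you help me with?"
        {"role": "user", "content": "สวัสดีครับ คุณช่วยอะไรได้บ้าง?"}
    ],
    "max_tokens": 4096,
    "temperature": 0.7,
    "top_p": 0.9
}

headers = {
    'apikey': 'YOUR_API_KEY',
    'Content-Type': 'application/json'
}

# A timeout keeps the script from hanging indefinitely on a stalled connection.
response = requests.post(url, headers=headers, json=payload, timeout=120)
response.raise_for_status()  # raise on HTTP errors instead of printing an error body

print(response.json())
```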
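Because the API enforces rate limits, production clients often retry transient failures with exponential backoff. The sketch below uses only the standard library so it has no dependencies. The helper names `send_once` and `post_with_retry` are hypothetical, and the set of retryable status codes (including 429 for rate limiting) is an assumption based on common HTTP practice, since this page does not document the error responses.

```python
import json
import time
import urllib.error
import urllib.request

# Assumption: these statuses indicate a transient condition worth retrying.
RETRYABLE = {429, 500, 502, 503, 504}


def send_once(url, api_key, payload, timeout=60):
    """Send one POST and return (status_code, parsed_body_or_None)."""
    request = urllib.request.Request(
        url,
        data=json.dumps(payload).encode("utf-8"),
        headers={"apikey": api_key, "Content-Type": "application/json"},
        method="POST",
    )
    try:
        with urllib.request.urlopen(request, timeout=timeout) as response:
            return response.status, json.loads(response.read().decode("utf-8"))
    except urllib.error.HTTPError as error:
        return error.code, None


def post_with_retry(url, api_key, payload, send=send_once, sleep=time.sleep,
                    max_attempts=4, base_delay=1.0):
    """Retry transient failures with exponential backoff (1s, 2s, 4s, ...)."""
    status = None
    for attempt in range(max_attempts):
        status, body = send(url, api_key, payload)
        if 200 <= status < 300:
            return body
        if status not in RETRYABLE:
            raise RuntimeError(f"request failed with HTTP {status}")
        if attempt < max_attempts - 1:
            sleep(base_delay * 2 ** attempt)
    raise RuntimeError(f"gave up after {max_attempts} attempts (last status {status})")
```

A call such as `post_with_retry(url, "YOUR_API_KEY", payload)` returns the decoded JSON body on success. The `send` and `sleep` parameters exist so the retry logic can be exercised without network access.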

Python - Streaming

```python
import requests
import json

url = "https://api.iapp.co.th/v3/llm/deepseek-3p2/chat/completions"

payload = {
    "model": "deepseek-reasoner",
    "messages": [
        # Thai for "Please explain AI to me"
        {"role": "user", "content": "อธิบายเกี่ยวกับ AI ให้หน่อย"}
    ],
    "max_tokens": 4096,
    "temperature": 0.7,
    "top_p": 0.9,
    "stream": True
}

headers = {
    'apikey': 'YOUR_API_KEY',
    'Content-Type': 'application/json'
}

response = requests.post(url, headers=headers, json=payload, stream=True)

# Each non-empty line is a server-sent event of the form "data: {...}".
for line in response.iter_lines():
    if line:
        line = line.decode('utf-8')
        if line.startswith('data: '):
            data = line[6:]
            if data != '[DONE]':
                chunk = json.loads(data)
                # content may be missing or null in some chunks, so fall back to ''
                content = chunk['choices'][0]['delta'].get('content') or ''
                print(content, end='', flush=True)
```
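When streaming from deepseek-reasoner, it can be useful to keep the reasoning trace apart from the final answer. The sketch below assumes that streamed chunks carry a `reasoning_content` key inside `delta`, mirroring the non-streaming `message` object. This page does not show a streamed chunk, so treat that field location as an assumption. The function name `collect_stream` is hypothetical.

```python
import json


def collect_stream(lines):
    """Accumulate (reasoning, answer) text from an iterable of SSE lines.

    Accepts bytes or str lines, such as those yielded by
    requests.Response.iter_lines().
    """
    reasoning, answer = [], []
    for raw in lines:
        line = raw.decode("utf-8") if isinstance(raw, bytes) else raw
        if not line.startswith("data: "):
            continue  # skip blank keep-alive lines and non-data fields
        data = line[len("data: "):]
        if data.strip() == "[DONE]":
            break
        chunk = json.loads(data)
        if not chunk.get("choices"):
            continue  # tolerate chunks that carry no choices
        delta = chunk["choices"][0].get("delta", {})
        # Either field may be absent or null in a given chunk.
        reasoning.append(delta.get("reasoning_content") or "")
        answer.append(delta.get("content") or "")
    return "".join(reasoning), "".join(answer)
```

With the `requests` example above, this would be called as `reasoning, answer = collect_stream(response.iter_lines())`.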

JavaScript / Node.js

```javascript
const axios = require('axios');

const url = 'https://api.iapp.co.th/v3/llm/deepseek-3p2/chat/completions';

const payload = {
  model: 'deepseek-reasoner',
  messages: [
    // Thai for "Hello, what can you help me with?"
    { role: 'user', content: 'สวัสดีครับ คุณช่วยอะไรได้บ้าง?' }
  ],
  max_tokens: 4096,
  temperature: 0.7,
  top_p: 0.9
};

const config = {
  headers: {
    'apikey': 'YOUR_API_KEY',
    'Content-Type': 'application/json'
  }
};

axios.post(url, payload, config)
  .then(response => {
    console.log(response.data.choices[0].message.content);
  })
  .catch(error => {
    console.error(error);
  });
```

PHP

```php
<?php

$curl = curl_init();

$payload = json_encode([
    'model' => 'deepseek-reasoner',
    'messages' => [
        // Thai for "Hello, what can you help me with?"
        ['role' => 'user', 'content' => 'สวัสดีครับ คุณช่วยอะไรได้บ้าง?']
    ],
    'max_tokens' => 4096,
    'temperature' => 0.7,
    'top_p' => 0.9
]);

curl_setopt_array($curl, [
    CURLOPT_URL => 'https://api.iapp.co.th/v3/llm/deepseek-3p2/chat/completions',
    CURLOPT_RETURNTRANSFER => true,
    CURLOPT_POST => true,
    CURLOPT_POSTFIELDS => $payload,
    CURLOPT_HTTPHEADER => [
        'apikey: YOUR_API_KEY',
        'Content-Type: application/json'
    ],
]);

$response = curl_exec($curl);
curl_close($curl);

$result = json_decode($response, true);
echo $result['choices'][0]['message']['content'];
```

API Reference

Headers

| Parameter | Type | Required | Description |
|---|---|---|---|
| apikey | String | Yes | Your API key |
| Content-Type | String | Yes | application/json |

Request Body Parameters

| Parameter | Type | Required | Description |
|---|---|---|---|
| model | String | Yes | Model name: deepseek-reasoner or deepseek-chat |
| messages | Array | Yes | Array of message objects with role and content |
| max_tokens | Integer | No | Maximum tokens to generate (default: 4096, max: 128000) |
| stream | Boolean | No | Enable streaming response (default: false) |
| temperature | Float | No | Sampling temperature 0-2 (default: 0.7) |
| top_p | Float | No | Nucleus sampling (default: 0.9) |
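The constraints in the table above can be checked on the client before a request is sent, which avoids spending a rate-limited call on an invalid payload. This is a minimal sketch: the function name `build_payload` is hypothetical, and the `top_p` range of (0, 1] is an assumption based on the usual definition of nucleus sampling, since the table only gives its default.

```python
ALLOWED_MODELS = {"deepseek-reasoner", "deepseek-chat"}
MAX_TOKENS_LIMIT = 128000


def build_payload(messages, model="deepseek-reasoner", max_tokens=4096,
                  temperature=0.7, top_p=0.9, stream=False):
    """Validate parameters against the documented ranges and return a request body."""
    if model not in ALLOWED_MODELS:
        raise ValueError(f"model must be one of {sorted(ALLOWED_MODELS)}")
    if not messages:
        raise ValueError("messages must contain at least one message")
    if not 1 <= max_tokens <= MAX_TOKENS_LIMIT:
        raise ValueError(f"max_tokens must be between 1 and {MAX_TOKENS_LIMIT}")
    if not 0 <= temperature <= 2:
        raise ValueError("temperature must be between 0 and 2")
    if not 0 < top_p <= 1:  # assumed range; not stated in the table
        raise ValueError("top_p must be in the range (0, 1]")
    return {
        "model": model,
        "messages": list(messages),
        "max_tokens": max_tokens,
        "temperature": temperature,
        "top_p": top_p,
        "stream": stream,
    }


payload = build_payload(
    [{"role": "user", "content": "Summarize the benefits of unit tests."}],
    model="deepseek-chat",
    temperature=0.0,
)
```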

Message Object

| Field | Type | Description |
|---|---|---|
| role | String | system, user, or assistant |
| content | String | The message content |
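A multi-turn conversation is built by appending each reply to the `messages` array and sending the whole history again. The sketch below keeps only the `role` and `content` fields listed in the table above when storing an assistant reply, so `reasoning_content` is not sent back. Whether the API accepts or ignores extra fields is not documented here, so dropping them is a conservative assumption. The function names are hypothetical.

```python
def start_conversation(system_prompt, user_text):
    """Create the initial messages array with a system instruction and first user turn."""
    return [
        {"role": "system", "content": system_prompt},
        {"role": "user", "content": user_text},
    ]


def extend_conversation(messages, response_body, next_user_text):
    """Return a new history with the assistant reply and the next user turn appended."""
    reply = response_body["choices"][0]["message"]
    return messages + [
        # Keep only role and content; reasoning_content is intentionally dropped.
        {"role": "assistant", "content": reply.get("content") or ""},
        {"role": "user", "content": next_user_text},
    ]


history = start_conversation("You are a concise assistant.", "Hello!")
fake_response = {
    "choices": [{
        "message": {
            "role": "assistant",
            "content": "Hello there! How can I assist you today?",
            "reasoning_content": "The user greeted me, so I should greet them back.",
        }
    }]
}
history = extend_conversation(history, fake_response, "What is a context window?")
```

Returning a new list instead of mutating `messages` makes it easy to branch a conversation or retry a turn from an earlier state.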

Response Fields

| Field | Type | Description |
|---|---|---|
| content | String | The generated response |
| reasoning_content | String | (deepseek-reasoner only) The reasoning process |

Recommended Temperature Settings

| Use Case | Temperature |
|---|---|
| Coding / Math | 0.0 |
| Data Analysis | 1.0 |
| General Conversation | 1.3 |
| Translation | 1.3 |
| Creative Writing | 1.5 |
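An application that serves several kinds of tasks can select the temperature from the table above instead of hard-coding one value. This is a small sketch: the use-case keys and the function name `temperature_for` are hypothetical, and unknown use cases fall back to the documented default of 0.7.

```python
# Values copied from the recommended settings table; the keys are illustrative.
RECOMMENDED_TEMPERATURE = {
    "coding": 0.0,
    "math": 0.0,
    "data_analysis": 1.0,
    "general_conversation": 1.3,
    "translation": 1.3,
    "creative_writing": 1.5,
}

DEFAULT_TEMPERATURE = 0.7  # the API's documented default


def temperature_for(use_case: str) -> float:
    """Return the recommended temperature, or the API default for unknown use cases."""
    return RECOMMENDED_TEMPERATURE.get(use_case, DEFAULT_TEMPERATURE)


payload = {
    "model": "deepseek-chat",
    "messages": [{"role": "user", "content": "Translate 'good morning' into Thai."}],
    "temperature": temperature_for("translation"),
}
```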

Model Information

| Property | Value |
|---|---|
| Model Name | deepseek-reasoner / deepseek-chat |
| Base Model | DeepSeek-V3.2 |
| Parameters | 685B |
| Context Length | 128,000 tokens |
| Languages | Thai, English, Chinese, and more |
| License | MIT |

Use Cases

- Advanced Reasoning: Complex problem-solving, mathematical proofs, logical deduction

- Coding Assistance: Code generation, debugging, code explanation

- Content Generation: Articles, reports, creative writing in multiple languages

- Translation: High-quality translation between Thai, English, and other languages

- Q&A Systems: Intelligent question answering with reasoning capabilities

- Data Analysis: Data interpretation and insights generation

Pricing

| AI API Service Name | Endpoint | IC Cost | On-Premise |
|---|---|---|---|
| DeepSeek-V3.2 [v3.2] | /v3/llm/deepseek-3p2/chat/completions | Input: 0.01 IC / 1K tokens (~10 THB/1M, ~$0.30/1M); Output: 0.02 IC / 1K tokens | Contact |

Support

For support and questions:

- Discord: Join our community

- Email: support@iapp.co.th

- Documentation: Full API Docs