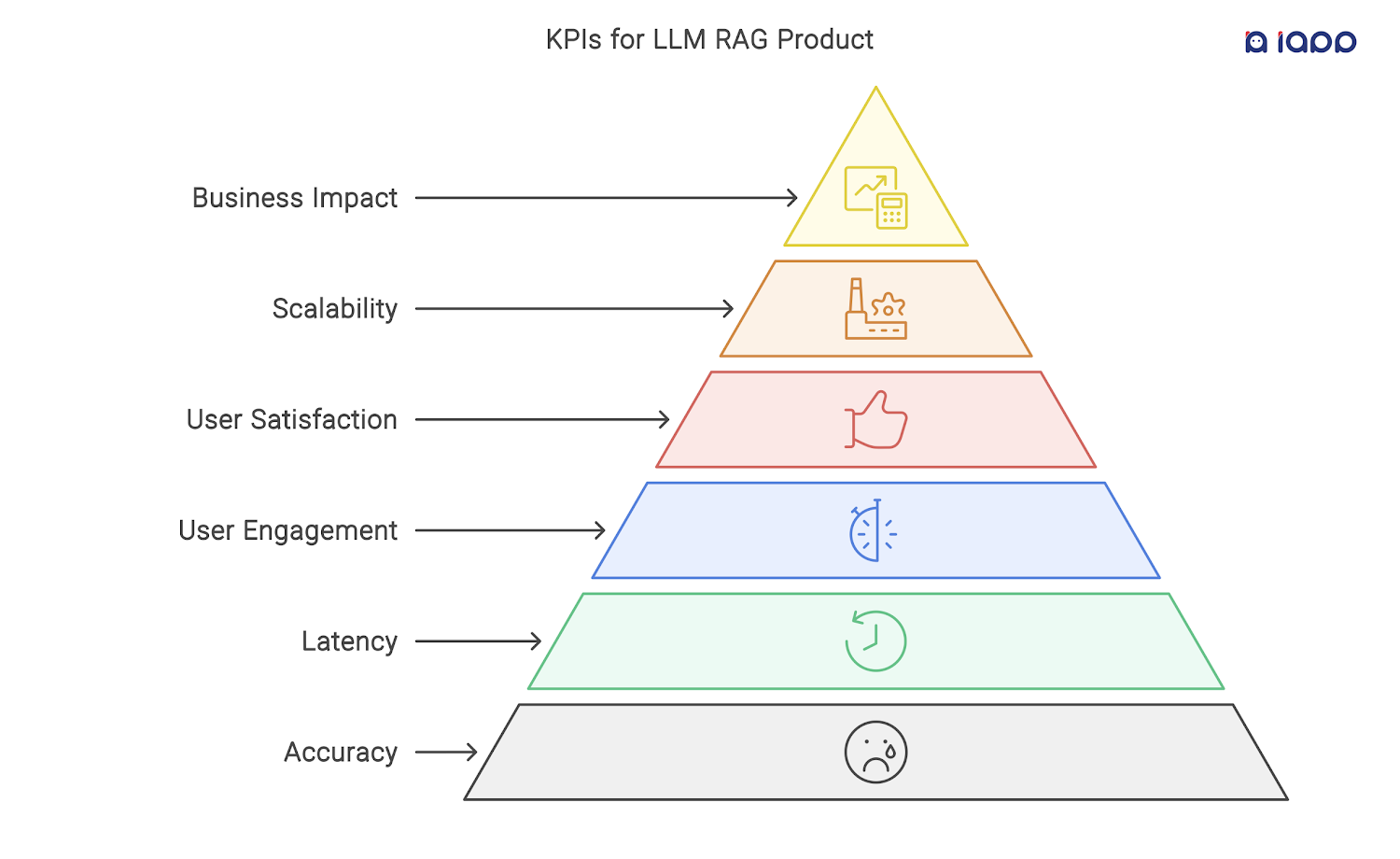

Key Performance Indicators (KPIs) for Evaluating Retrieval-Augmented Generation (RAG) Systems Built on Large Language Models (LLMs)

1. Accuracy

1.1 Retrieval Accuracy

Definition: Measures the relevance of documents retrieved by the system.

Metrics: Precision@k, Recall@k, F1 Score

Goal: To ensure that the retrieved documents are highly relevant to the search query.
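
As a minimal sketch, assume each test query comes with a hand-labeled set of relevant document IDs (the IDs below are hypothetical). Precision@k divides the number of relevant documents in the top k results by k. Recall@k divides that same count by the total number of relevant documents. F1 is the harmonic mean of the two.

```python
from typing import List, Set


def _hits_at_k(retrieved: List[str], relevant: Set[str], k: int) -> int:
    """Count how many of the top-k retrieved document IDs are relevant."""
    if k <= 0:
        raise ValueError("k must be a positive integer")
    return sum(1 for doc_id in retrieved[:k] if doc_id in relevant)


def precision_at_k(retrieved: List[str], relevant: Set[str], k: int) -> float:
    """Fraction of the top-k results that are relevant (divides by k by convention)."""
    return _hits_at_k(retrieved, relevant, k) / k


def recall_at_k(retrieved: List[str], relevant: Set[str], k: int) -> float:
    """Fraction of all relevant documents that appear in the top-k results."""
    if not relevant:
        return 0.0
    return _hits_at_k(retrieved, relevant, k) / len(relevant)


def f1_at_k(retrieved: List[str], relevant: Set[str], k: int) -> float:
    """Harmonic mean of Precision@k and Recall@k."""
    p = precision_at_k(retrieved, relevant, k)
    r = recall_at_k(retrieved, relevant, k)
    return 0.0 if p + r == 0 else 2 * p * r / (p + r)


# Hypothetical ranked results for one query and its labeled relevant set.
retrieved = ["d1", "d7", "d3", "d9", "d2"]
relevant = {"d1", "d2", "d4"}
print(precision_at_k(retrieved, relevant, 5))  # 0.4
print(recall_at_k(retrieved, relevant, 5))
print(f1_at_k(retrieved, relevant, 5))
```

Per-query scores like these are typically averaged over the whole evaluation set of queries.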

1.2 Generation Accuracy

Definition: Evaluates the correctness and relevance of the generated answer.

Metrics: BLEU Score, ROUGE Score (both measure n-gram overlap with a reference answer), Human Evaluation

Goal: To ensure that the generated text is accurate and contextually appropriate.
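
BLEU and ROUGE both score a generated answer by counting the n-grams it shares with a reference answer. The following is an illustration only: a simplified ROUGE-1 (unigram overlap) score that assumes lowercase whitespace tokenization. Established ROUGE implementations add normalization such as stemming. BLEU instead combines several n-gram orders and applies a brevity penalty.

```python
from collections import Counter
from typing import Dict


def rouge1(candidate: str, reference: str) -> Dict[str, float]:
    """Simplified ROUGE-1: unigram overlap between a generated and a reference answer.

    Assumes lowercase whitespace tokenization; no stemming or stopword removal.
    """
    cand = Counter(candidate.lower().split())
    ref = Counter(reference.lower().split())
    # Counter intersection keeps the minimum count of each shared word (clipped overlap).
    overlap = sum((cand & ref).values())
    precision = overlap / sum(cand.values()) if cand else 0.0
    recall = overlap / sum(ref.values()) if ref else 0.0
    f1 = 0.0 if precision + recall == 0 else 2 * precision * recall / (precision + recall)
    return {"precision": precision, "recall": recall, "f1": f1}


scores = rouge1("the cat sat on the mat", "the cat is on the mat")
print(scores)
```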

2. Latency

2.1 Retrieval Latency

Definition: The time taken to retrieve relevant documents.

Metrics: Average retrieval time in milliseconds

Goal: Reduce retrieval time to enhance user experience.
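
A minimal sketch for measuring average retrieval time is to wrap the retrieval call in a high-resolution timer and average over a set of test queries. The `retrieve` function below is a hypothetical stand-in that only sleeps. In a real system it would query the vector store or search index.

```python
import statistics
import time
from typing import Callable, Iterable, List


def average_latency_ms(fn: Callable[[str], object], queries: Iterable[str]) -> float:
    """Call fn once per query and return the mean wall-clock time in milliseconds."""
    samples_ms: List[float] = []
    for query in queries:
        start = time.perf_counter()  # monotonic, high-resolution clock
        fn(query)
        samples_ms.append((time.perf_counter() - start) * 1000.0)
    if not samples_ms:
        raise ValueError("at least one query is required")
    return statistics.mean(samples_ms)


def retrieve(query: str) -> List[str]:
    """Hypothetical retriever: simulates a 10 ms index lookup."""
    time.sleep(0.01)
    return ["doc-1", "doc-2"]


print(f"{average_latency_ms(retrieve, ['q1', 'q2', 'q3']):.1f} ms")
```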

2.2 Generation Latency

Definition: The time taken to generate an answer after retrieving documents.

Metrics: Average generation time in milliseconds

Goal: Ensure fast answer generation to maintain user engagement.
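
Generation latency is defined as the time spent *after* retrieval, so one way to measure it is to time each pipeline stage separately. That way retrieval time is not counted twice. The `retrieve` and `generate` functions below are hypothetical placeholders for the real retriever and LLM call.

```python
import statistics
import time
from dataclasses import dataclass
from typing import Callable, List, Tuple


@dataclass
class StageTimings:
    retrieval_ms: float
    generation_ms: float


def answer_with_timings(
    query: str,
    retrieve: Callable[[str], List[str]],
    generate: Callable[[str, List[str]], str],
) -> Tuple[str, StageTimings]:
    """Run retrieve, then generate, timing each stage independently."""
    t0 = time.perf_counter()
    docs = retrieve(query)
    t1 = time.perf_counter()
    answer = generate(query, docs)  # only this span counts as generation latency
    t2 = time.perf_counter()
    return answer, StageTimings((t1 - t0) * 1000.0, (t2 - t1) * 1000.0)


def retrieve(query: str) -> List[str]:
    """Hypothetical retriever stub."""
    return ["doc-1", "doc-2"]


def generate(query: str, docs: List[str]) -> str:
    """Hypothetical LLM call stub: simulates 20 ms of generation."""
    time.sleep(0.02)
    return f"Answer to {query!r} based on {len(docs)} documents"


timings = [answer_with_timings(q, retrieve, generate)[1] for q in ["q1", "q2", "q3"]]
avg_generation_ms = statistics.mean(t.generation_ms for t in timings)
print(f"average generation latency: {avg_generation_ms:.1f} ms")
```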

3. User Engagement

3.1 Session Duration

Definition: The average time a user spends interacting with the product per session.

Metrics: Average session duration in minutes

Goal: Increase session duration, since longer sessions indicate higher user engagement.
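
This sketch assumes session start and end timestamps are already available, for example from an analytics log. The data below is made up. Average session duration in minutes is then the total session time divided by the number of sessions:

```python
from datetime import datetime
from typing import List, Tuple


def average_session_minutes(sessions: List[Tuple[datetime, datetime]]) -> float:
    """Mean session length in minutes, given (start, end) timestamp pairs."""
    if not sessions:
        return 0.0
    total_seconds = 0.0
    for start, end in sessions:
        if end < start:
            raise ValueError("session end precedes its start")
        total_seconds += (end - start).total_seconds()
    return total_seconds / len(sessions) / 60.0


sessions = [
    (datetime(2024, 1, 1, 10, 0), datetime(2024, 1, 1, 10, 5)),   # 5 minutes
    (datetime(2024, 1, 1, 11, 0), datetime(2024, 1, 1, 11, 15)),  # 15 minutes
]
print(average_session_minutes(sessions))  # 10.0
```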

3.2 Interaction Rate

Definition: The frequency of user interactions with the system.

Metrics: Number of interactions per session

Goal: Promote frequent interactions to increase user satisfaction.
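
Here is a sketch of interactions per session. It assumes a hypothetical event log of `(session_id, event_type)` pairs plus the full set of session IDs. Dividing by all sessions, rather than only those that appear in the log, keeps sessions with zero interactions in the average.

```python
from typing import Iterable, Set, Tuple


def interactions_per_session(
    events: Iterable[Tuple[str, str]], session_ids: Set[str]
) -> float:
    """Average number of logged interactions per session."""
    if not session_ids:
        return 0.0
    total = sum(1 for session_id, _event in events if session_id in session_ids)
    return total / len(session_ids)


events = [("s1", "query"), ("s1", "follow_up"), ("s1", "feedback"), ("s2", "query")]
print(interactions_per_session(events, {"s1", "s2", "s3"}))  # s3 had no interactions
```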

4. User Satisfaction

4.1 User Feedback

Definition: Direct feedback from users regarding their experience.

Metrics: Net Promoter Score (NPS), Customer Satisfaction Score (CSAT)

Goal: Achieve high satisfaction scores to ensure users have a positive experience.
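
Both scores follow widely used conventions. NPS asks users how likely they are to recommend the product on a 0-10 scale. It then subtracts the percentage of detractors (0-6) from the percentage of promoters (9-10), giving a score between -100 and +100. CSAT is commonly reported as the percentage of respondents who answer 4 or 5 on a 1-5 satisfaction scale. The ratings below are invented for illustration.

```python
from typing import Sequence


def net_promoter_score(ratings: Sequence[int]) -> float:
    """NPS = % promoters (9-10) minus % detractors (0-6), on a 0-10 scale."""
    if not ratings:
        raise ValueError("no ratings supplied")
    if any(not 0 <= r <= 10 for r in ratings):
        raise ValueError("NPS ratings must be between 0 and 10")
    promoters = sum(1 for r in ratings if r >= 9)
    detractors = sum(1 for r in ratings if r <= 6)
    return 100.0 * (promoters - detractors) / len(ratings)


def csat_percent(ratings: Sequence[int], satisfied_threshold: int = 4) -> float:
    """CSAT = % of 1-5 ratings at or above the 'satisfied' threshold."""
    if not ratings:
        raise ValueError("no ratings supplied")
    satisfied = sum(1 for r in ratings if r >= satisfied_threshold)
    return 100.0 * satisfied / len(ratings)


print(net_promoter_score([10, 10, 9, 5]))  # 50.0
print(csat_percent([5, 4, 3, 2, 5]))       # 60.0
```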

4.2 Error Rate

Definition: The frequency of errors encountered by users.

Metrics: Number of errors per 100 interactions

Goal: Reduce the error rate to improve reliability and user trust.
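
A minimal sketch assumes each interaction is logged with a flag marking whether it ended in an error. What counts as an error (a failed request, a timeout, an answer the user reports as wrong) is a choice for the team to define.

```python
from typing import Iterable


def errors_per_100_interactions(outcomes: Iterable[bool]) -> float:
    """outcomes: one boolean per interaction, True if that interaction produced an error."""
    total = 0
    errors = 0
    for is_error in outcomes:
        total += 1
        errors += int(is_error)
    if total == 0:
        raise ValueError("no interactions recorded")
    return 100.0 * errors / total


outcomes = [False] * 47 + [True] * 3  # hypothetical log: 3 errors in 50 interactions
print(errors_per_100_interactions(outcomes))  # 6.0
```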

5. Scalability

5.1 System Throughput

Definition: The number of queries the system can handle per second.

Metrics: Queries per second (QPS)

Goal: Ensure the system can handle high loads efficiently.
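
One rough way to estimate throughput is to send a batch of queries with a fixed number in flight at once. Then divide the number completed by the elapsed wall-clock time. The sketch below uses a hypothetical `handle_query` function as a stand-in for a call to the RAG service. Threads work for this I/O-bound case because the handler mostly waits.

```python
import time
from concurrent.futures import ThreadPoolExecutor
from typing import Callable, Sequence


def measure_qps(
    handler: Callable[[str], object], queries: Sequence[str], concurrency: int = 8
) -> float:
    """Run all queries with up to `concurrency` in flight and return queries per second."""
    if not queries:
        raise ValueError("at least one query is required")
    start = time.perf_counter()
    with ThreadPoolExecutor(max_workers=concurrency) as pool:
        # Consuming the iterator waits for every query and re-raises any failure.
        list(pool.map(handler, queries))
    elapsed = time.perf_counter() - start
    return len(queries) / elapsed


def handle_query(query: str) -> str:
    """Hypothetical handler: simulates a 20 ms request to the RAG service."""
    time.sleep(0.02)
    return "answer"


qps = measure_qps(handle_query, [f"q{i}" for i in range(16)], concurrency=4)
print(f"{qps:.0f} QPS")
```

Increasing `concurrency` until QPS stops rising gives a rough sense of where the system saturates.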

5.2 Resource Utilization

Definition: The efficiency of resource usage by the system.

Metrics: CPU and memory utilization

Goal: Optimize resource usage to reduce costs and improve efficiency.
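
Monitoring tools usually track these metrics for the whole host. For a single workload, here is a sketch using only the standard library. It reports process CPU utilization, computed as CPU time divided by wall-clock time, which can exceed 100% when several threads run in parallel. It also reports peak Python memory allocation via `tracemalloc`. Note that `tracemalloc` only sees memory allocated through Python's allocator, so it misses GPU memory and may miss native library allocations. The workload is a hypothetical placeholder.

```python
import time
import tracemalloc
from typing import Callable, Dict


def profile_resources(workload: Callable[[], object]) -> Dict[str, float]:
    """Run workload once and report CPU utilization and peak traced memory."""
    tracemalloc.start()
    wall_start = time.perf_counter()
    cpu_start = time.process_time()  # user + system CPU time for this process
    try:
        workload()
    finally:
        cpu_seconds = time.process_time() - cpu_start
        wall_seconds = time.perf_counter() - wall_start
        _current, peak_bytes = tracemalloc.get_traced_memory()
        tracemalloc.stop()
    return {
        "wall_seconds": wall_seconds,
        "cpu_percent": 100.0 * cpu_seconds / wall_seconds if wall_seconds > 0 else 0.0,
        "peak_memory_mb": peak_bytes / (1024 * 1024),
    }


def build_index() -> list:
    """Hypothetical workload: allocate and fill a list of 200,000 floats."""
    return [float(i) ** 0.5 for i in range(200_000)]


print(profile_resources(build_index))
```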

6. Business Impact

6.1 Conversion Rate

Definition: The percentage of users who perform a desired action (e.g., purchase, registration).

Metrics: Percentage conversion rate

Goal: Increase conversion rate to drive business growth.
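
A minimal sketch assumes two hypothetical sets of user IDs: everyone who used the product during the measurement period, and the subset who completed the desired action. Using sets counts each user at most once, even if they convert several times.

```python
from typing import Set


def conversion_rate_percent(converted: Set[str], all_users: Set[str]) -> float:
    """Percentage of users in all_users who also appear in converted."""
    if not all_users:
        raise ValueError("no users in the measurement period")
    return 100.0 * len(converted & all_users) / len(all_users)


all_users = {f"u{i}" for i in range(1, 9)}  # 8 hypothetical users
converted = {"u2", "u5"}                     # 2 of them registered or purchased
print(conversion_rate_percent(converted, all_users))  # 25.0
```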

6.2 Return on Investment (ROI)

Definition: The financial return generated by the product relative to its cost.

Metrics: Percentage ROI

Goal: Ensure the product delivers a positive financial return.
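
ROI is conventionally computed as net gain divided by cost, expressed as a percentage. In the sketch below, how gains are attributed to the product and which costs are included (infrastructure, LLM usage, staff time) are assumptions left to the reader. The figures are invented.

```python
def roi_percent(gain: float, cost: float) -> float:
    """ROI (%) = (gain - cost) / cost * 100."""
    if cost <= 0:
        raise ValueError("cost must be positive")
    return (gain - cost) / cost * 100.0


# Hypothetical: the product generated 150,000 in value and cost 100,000 to run.
print(roi_percent(150_000, 100_000))  # 50.0
```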

Summary

Tracking these KPIs provides a comprehensive view of the performance and success of an LLM-based RAG product. By focusing on accuracy, latency, user engagement, user satisfaction, scalability, and business impact, stakeholders can make data-driven decisions to improve the product and achieve strategic objectives. Reviewing these KPIs regularly, and acting on what they show, keeps the product aligned with evolving business goals and user needs.

If you are interested in developing and evaluating high-performance RAG products, iApp Technology offers Chinda, a cutting-edge RAG LLM platform that you can use: https://chinda.iapp.co.th