Introducing GPT-OSS-120B and GPT-OSS-20B with OpenAI Harmony Format Support

OpenAI has returned to open model releases with two new models: GPT-OSS-120B and GPT-OSS-20B, its first open-weight language models since GPT-2. Both are reasoning models available under the Apache 2.0 license, and both natively support the OpenAI Harmony response format.

What Are GPT-OSS Models?

GPT-OSS models use a Mixture-of-Experts (MoE) architecture that activates only a fraction of the total parameters for each token, which keeps inference cost well below that of a dense model of the same size. Released in August 2025 under the Apache 2.0 license, the two models target different performance-efficiency trade-offs, covering deployment scenarios from enterprise servers to consumer devices.

GPT-OSS-120B: Enterprise-Scale Performance

The 117-billion parameter model (116.8B total with 5.1B active parameters per token) delivers:

- Advanced Reasoning: Outperforms OpenAI o3-mini and matches o4-mini on competition coding (Codeforces) and problem solving (MMLU)

- MoE Architecture: Mixture-of-Experts design with alternating dense and locally banded sparse attention patterns

- Long Context Windows: Native support for up to 128k context length with Rotary Positional Embedding (RoPE)

- Enterprise Integration: Optimized for reasoning, agentic tasks, and developer use cases

- MXFP4 Quantization: MoE weights are quantized to the MXFP4 format, enabling deployment on a single 80GB GPU

GPT-OSS-20B: Efficient and Accessible

The 21-billion parameter model (20.9B total with 3.6B active parameters per token) focuses on:

- Consumer Hardware: Runs on laptops and Apple Silicon devices with only 16GB memory

- Competitive Performance: Matches or exceeds o3-mini despite smaller size, outperforming on mathematics

- Edge Deployment: Well suited to on-device applications and local inference

- MXFP4 Efficiency: Requires only 16GB of memory thanks to quantized MoE weights

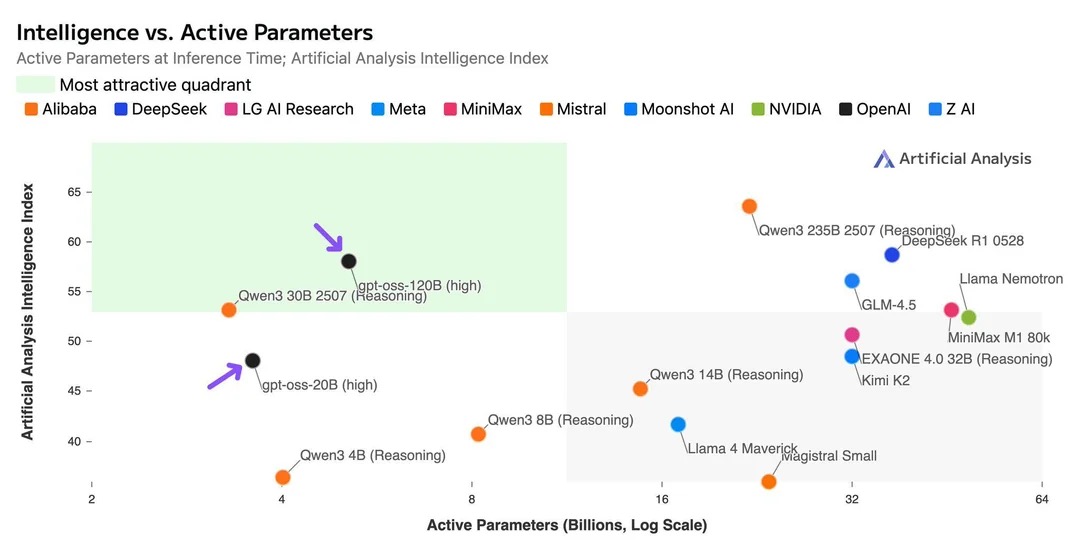

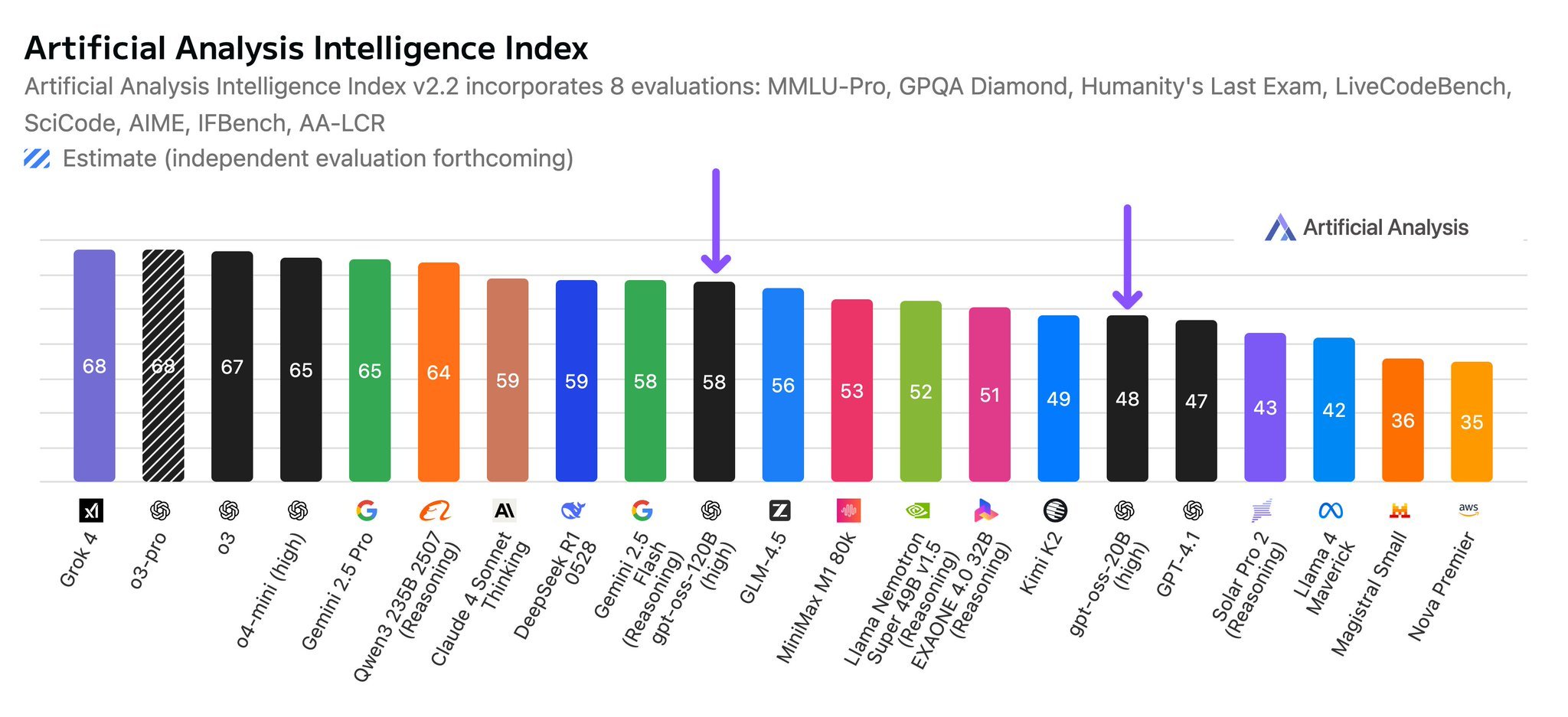

Performance Benchmarks

Both GPT-OSS models are competitive with OpenAI's small proprietary reasoning models across reasoning, coding, and knowledge evaluations, which makes them strong options among openly available models.

Benchmark Results

The benchmark results show that:

- GPT-OSS-120B outperforms OpenAI o3-mini and matches o4-mini on competition coding and general problem solving

- GPT-OSS-20B matches or exceeds o3-mini performance despite its smaller size, particularly excelling in mathematics

- Both models demonstrate strong performance across diverse evaluation categories including reasoning, coding, and knowledge tasks

- The models maintain competitive performance while offering the advantages of open-source accessibility and on-premise deployment

These results position GPT-OSS models as powerful alternatives to proprietary solutions, especially for organizations requiring high-performance AI capabilities with full control over their deployment environment.

OpenAI Harmony Format: Structured Conversations

Both models feature native support for the OpenAI Harmony response format, bringing several key advantages:

Multi-Channel Communication

The Harmony format organizes responses across three distinct channels:

- Final Channel: User-facing responses that provide clear, actionable information

- Analysis Channel: Internal reasoning and chain-of-thought processes

- Commentary Channel: Function calls, tool usage, and implementation details
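
To make the channel split concrete, here is a minimal sketch that separates raw Harmony-style output into per-channel messages using only the special tokens that appear in this article. The names `ChannelMessage`, `split_channels`, and `user_visible_text` are hypothetical helpers written for this illustration. A production system would more likely rely on a dedicated Harmony parser than on a regular expression.

```python
import re
from typing import NamedTuple


class ChannelMessage(NamedTuple):
    channel: str   # "analysis", "commentary", or "final"
    content: str


# One message: a channel marker, an optional header remainder, the body,
# and a terminator token (or the end of the string for truncated output).
_MESSAGE_RE = re.compile(
    r"<\|channel\|>(?P<channel>\w+).*?<\|message\|>"
    r"(?P<content>.*?)"
    r"(?:<\|end\|>|<\|return\|>|<\|call\|>|$)",
    re.DOTALL,
)


def split_channels(raw_output: str) -> list[ChannelMessage]:
    """Split raw Harmony-style output into (channel, content) pairs."""
    return [
        ChannelMessage(m["channel"], m["content"].strip())
        for m in _MESSAGE_RE.finditer(raw_output)
    ]


def user_visible_text(raw_output: str) -> str:
    """Keep only the text that belongs on the final channel."""
    return "\n".join(
        msg.content for msg in split_channels(raw_output) if msg.channel == "final"
    )


raw = (
    "<|channel|>analysis<|message|>Need to summarize the key metrics.<|end|>"
    "<|start|>assistant<|channel|>final<|message|>Revenue grew 15% in Q3.<|return|>"
)
print(user_visible_text(raw))
```

Filtering on the `final` channel before display keeps internal reasoning out of the user interface while still leaving the `analysis` text available for logging.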

Role-Based Hierarchy

Five defined roles create a clear information hierarchy, listed here from highest to lowest priority:

- System: Highest priority configuration and constraints

- Developer: Implementation guidance and technical specifications

- User: End-user requests and requirements

- Assistant: AI-generated responses and reasoning

- Tool: Results returned from external function calls and data retrieval
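
As a rough illustration of how role-tagged messages become a single prompt, the sketch below renders a short conversation with the `<|start|>`, `<|message|>`, and `<|end|>` tokens used elsewhere in this article. `Message` and `render_prompt` are hypothetical names, and the sketch covers only the four text-bearing roles. Tool results are left out because, as far as we understand the format, they are written with the tool's own name in the header rather than the literal word "tool".

```python
from dataclasses import dataclass
from typing import Optional

# Roles handled by this sketch, ordered from highest to lowest priority.
TEXT_ROLES = ("system", "developer", "user", "assistant")


@dataclass
class Message:
    role: str
    content: str
    channel: Optional[str] = None  # only meaningful for assistant messages


def render_prompt(messages: list[Message]) -> str:
    """Render messages into one Harmony-style prompt string."""
    parts = []
    for msg in messages:
        if msg.role not in TEXT_ROLES:
            raise ValueError(f"unsupported role: {msg.role!r}")
        header = msg.role
        if msg.channel is not None:
            header += f"<|channel|>{msg.channel}"
        parts.append(f"<|start|>{header}<|message|>{msg.content}<|end|>")
    # A trailing assistant header cues the model to write the next turn.
    parts.append("<|start|>assistant")
    return "".join(parts)


prompt = render_prompt([
    Message("system", "Reasoning: low"),
    Message("developer", "Answer in one sentence."),
    Message("user", "What is a Mixture-of-Experts model?"),
])
print(prompt)
```

Rejecting unknown roles early is a deliberate choice here: a misspelled role would otherwise produce a prompt the model was never trained to read.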

Enhanced Reasoning Capabilities

The format supports three reasoning effort levels, which are set in the system message:

- Low Effort: Quick responses for simple queries

- Medium Effort: Balanced reasoning for standard tasks

- High Effort: Deep analysis for complex problem-solving
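
The following sketch shows one way to build a system message that selects an effort level. It assumes the level is declared with a `Reasoning: <level>` line in the system message, which matches our reading of the Harmony documentation. The identity line and the `build_system_message` helper are placeholders invented for this example.

```python
REASONING_LEVELS = ("low", "medium", "high")


def build_system_message(reasoning: str = "medium") -> str:
    """Return a Harmony-style system message with the chosen effort level."""
    level = reasoning.strip().lower()
    if level not in REASONING_LEVELS:
        raise ValueError(
            f"reasoning must be one of {REASONING_LEVELS}, got {reasoning!r}"
        )
    body = (
        "You are a helpful assistant.\n"  # placeholder identity line
        f"Reasoning: {level}\n\n"
        "# Valid channels: analysis, commentary, final. "
        "Channel must be included for every message."
    )
    return f"<|start|>system<|message|>{body}<|end|>"


print(build_system_message("high"))
```

In practice the level could be chosen per request, for example `low` for short lookups and `high` for multi-step analysis, with higher levels costing more latency and more generated tokens.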

Practical Applications

Enterprise Document Processing

<|channel|>analysis<|message|>

User requests quarterly financial report processing. Need to analyze document structure, identify key financial metrics, cross-reference with historical data for context and trends.

<|end|>

<|start|>assistant<|channel|>final<|message|>

The Q3 financial report shows 15% revenue growth with improved margins in the AI services division.

<|return|>

Multilingual Customer Support

The models excel at providing structured support across languages, maintaining consistency while adapting to cultural contexts.

Tool Calling and Function Integration

The Harmony format excels at structured tool calling and function integration:

<|channel|>analysis<|message|>

User wants weather information for Tokyo. Need to call weather API, parse response, and format for user.

<|end|>

<|start|>assistant<|channel|>commentary to=functions.weather_api <|constrain|>json<|message|>

{"location": "Tokyo", "units": "celsius"}

<|call|>

<|start|>functions.weather_api to=assistant<|channel|>commentary<|message|>

{"temperature": 24, "condition": "sunny", "humidity": 65, "wind_speed": 12}

<|end|>

<|start|>assistant<|channel|>final<|message|>

The weather in Tokyo is currently 24°C and sunny, with 65% humidity and wind speed of 12 km/h.

<|return|>
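
On the application side, the loop around such an exchange can be sketched as follows. The sketch assumes that a function call is addressed with a `to=functions.<name>` recipient in the message header, carries JSON arguments, and ends with a `<|call|>` token, which is our understanding of the Harmony wire format. `weather_api` is a canned stand-in rather than a real service, and `handle_tool_call` is a hypothetical helper.

```python
import json
import re
from typing import Optional


def weather_api(location: str, units: str = "celsius") -> dict:
    """Stand-in tool that returns canned data instead of calling a service."""
    return {"temperature": 24, "condition": "sunny", "humidity": 65, "wind_speed": 12}


# Registry of callable tools, keyed by the name the model uses.
TOOLS = {"weather_api": weather_api}

_CALL_RE = re.compile(
    r"to=functions\.(?P<name>\w+).*?<\|message\|>(?P<args>.*?)<\|call\|>",
    re.DOTALL,
)


def handle_tool_call(assistant_output: str) -> Optional[str]:
    """Run the requested tool and return a tool message, or None if no call."""
    match = _CALL_RE.search(assistant_output)
    if match is None:
        return None
    name = match["name"]
    if name not in TOOLS:
        raise KeyError(f"model requested unknown tool: {name}")
    arguments = json.loads(match["args"])
    result = TOOLS[name](**arguments)
    # The tool's reply is addressed back to the assistant.
    return (
        f"<|start|>functions.{name} to=assistant<|channel|>commentary"
        f"<|message|>{json.dumps(result)}<|end|>"
    )


call = (
    "<|channel|>commentary to=functions.weather_api <|constrain|>json"
    '<|message|>{"location": "Tokyo", "units": "celsius"}<|call|>'
)
print(handle_tool_call(call))
```

The returned string would be appended to the conversation before asking the model to continue, at which point it can produce the user-facing answer on the final channel.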

Research and Development

The analysis channel provides transparency into the model's reasoning process, crucial for research applications and model interpretability.

Implementation Considerations

Infrastructure Requirements

- GPT-OSS-120B: Fits within 80GB of accelerator memory thanks to MXFP4 quantization of the MoE weights, so a single GPU such as an NVIDIA H100, an 80GB A100, or an AMD MI300X is sufficient

- GPT-OSS-20B: Requires only 16GB of memory, making it suitable for consumer hardware, laptops, and edge devices
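
A back-of-the-envelope calculation shows why quantization makes these figures plausible. MXFP4 stores 4-bit values plus one shared 8-bit scale per block of 32 values, or about 4.25 bits per parameter. The sketch below assumes that 95% of the parameters sit in the quantized MoE layers and that the remainder is kept in 16-bit precision. Both numbers are illustrative assumptions rather than figures from this article, and the estimate ignores activations and the KV cache.

```python
MXFP4_BITS_PER_PARAM = 4.25   # 4-bit values + one 8-bit scale per 32-value block
BF16_BITS_PER_PARAM = 16.0    # assumed precision of the non-MoE weights


def weight_memory_gb(total_params: float, moe_fraction: float) -> float:
    """Estimate weight storage in GB (10^9 bytes) for a partly quantized model."""
    if not 0.0 <= moe_fraction <= 1.0:
        raise ValueError("moe_fraction must be between 0 and 1")
    moe_bits = total_params * moe_fraction * MXFP4_BITS_PER_PARAM
    other_bits = total_params * (1.0 - moe_fraction) * BF16_BITS_PER_PARAM
    return (moe_bits + other_bits) / 8 / 1e9


ASSUMED_MOE_FRACTION = 0.95  # illustrative guess, not a published figure

for name, params in (("GPT-OSS-120B", 116.8e9), ("GPT-OSS-20B", 20.9e9)):
    quantized = weight_memory_gb(params, ASSUMED_MOE_FRACTION)
    unquantized = weight_memory_gb(params, 0.0)
    print(f"{name}: ~{quantized:.1f} GB quantized vs ~{unquantized:.1f} GB in 16-bit")
```

Under these assumptions the larger model's weights land below 80GB and the smaller model's below 16GB, whereas storing everything in 16-bit precision would roughly triple the footprint.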

Integration Strategies

Both models support standard inference APIs while providing enhanced capabilities through Harmony format integration. Key technical features include:

- Transformer Architecture: Uses grouped multi-query attention with a group size of 8 for memory efficiency

- MoE Design: Each model uses mixture-of-experts to reduce active parameters per token

- Attention Patterns: Alternating dense and locally banded sparse attention, similar to GPT-3

- Position Encoding: Rotary Positional Embedding (RoPE) for improved sequence understanding
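
To show how a mixture-of-experts layer keeps the active parameter count low, here is a minimal sketch of generic top-k routing: a router scores every expert, only the best-scoring few are run, and their outputs are mixed using normalized weights. This illustrates the general technique rather than the exact GPT-OSS router. The expert count, the value of `top_k`, and the `route_token` helper are all chosen for illustration.

```python
import math


def route_token(router_logits: list[float], top_k: int) -> dict[int, float]:
    """Pick the top_k experts for one token and return their mixing weights."""
    if not 0 < top_k <= len(router_logits):
        raise ValueError("top_k must be between 1 and the number of experts")
    # Indices of the highest-scoring experts; ties keep the lower index first.
    chosen = sorted(
        range(len(router_logits)),
        key=lambda i: router_logits[i],
        reverse=True,
    )[:top_k]
    # Softmax over the chosen experts only, shifted by the max for stability.
    peak = max(router_logits[i] for i in chosen)
    exps = {i: math.exp(router_logits[i] - peak) for i in chosen}
    total = sum(exps.values())
    return {i: value / total for i, value in exps.items()}


# Five hypothetical experts, two of which are activated for this token.
weights = route_token([0.1, 2.0, -1.0, 2.0, 0.5], top_k=2)
print(weights)
```

Only the selected experts' feed-forward weights participate in the computation for that token, which is why the active parameter count per token is far smaller than the total.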

Organizations can:

- Start with an existing inference stack that exposes a standard chat API and applies the Harmony format internally

- Gradually adopt Harmony features for enhanced functionality

- Leverage analysis channels for debugging and optimization

Future Implications

The combination of powerful open-source models with structured conversation formats represents a significant step toward more transparent and controllable AI systems. The Harmony format's role-based approach and multi-channel communication enable:

- Improved Debugging: Clear separation of reasoning and output

- Better Monitoring: Structured logs for system analysis

- Enhanced Control: Fine-grained management of AI behavior

- Transparency: Visible reasoning processes for critical applications

Getting Started

Organizations interested in implementing GPT-OSS models with Harmony format support should consider:

- Assessment: Evaluate computational requirements and use cases

- Pilot Testing: Start with GPT-OSS-20B for initial exploration

- Integration Planning: Design systems to leverage multi-channel communication

- Training: Prepare teams for Harmony format implementation

The release of GPT-OSS-120B and GPT-OSS-20B under the Apache 2.0 license with OpenAI Harmony format support marks OpenAI's significant return to open-source AI development. These models offer organizations powerful tools for building transparent, controllable, and effective reasoning solutions that can run on everything from enterprise servers to consumer laptops.

Contact us to learn how iApp Technology can help implement these advanced models in your organization's AI infrastructure.